Clinical Trial Data vs Real-World Evidence: Key Differences Explained


Quick Takeaways

- Clinical trials answer "does it work under ideal conditions?" while real‑world evidence (RWE) answers "does it work in everyday practice?"

- RCTs have high internal validity but limited external validity; RWE offers broader population coverage but faces data‑quality challenges.

- Costs differ dramatically: a Phase III trial averages $19 million over 2‑3 years, whereas most RWE studies finish in under a year at 60‑75% lower cost.

- Regulators now accept RWE for post‑market safety and even for conditional approvals, but they still rely on RCTs for initial efficacy.

- Hybrid designs that combine both approaches are emerging as the best way to balance rigor and relevance.

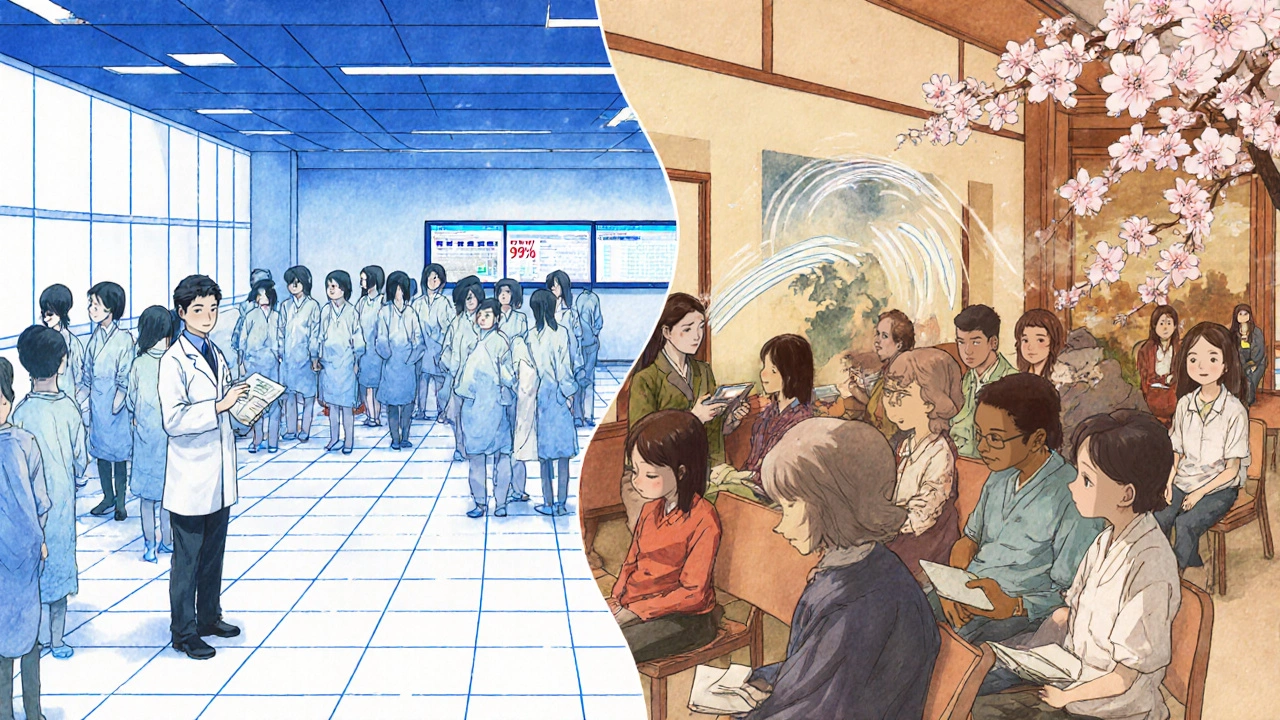

When a new drug hits the market, doctors, payers, and patients all want to know two things: will it work, and will it work for *my* situation? The answer comes from two data streams that look similar but are built on very different foundations. Below we break down the most important differences between clinical trial data (information collected from carefully controlled experiments that test a therapy’s efficacy and safety under predefined conditions) and real-world evidence (insights drawn from everyday clinical practice).

What Exactly Is Clinical Trial Data?

The Randomized Controlled Trial (RCT), the gold‑standard study design in which participants are randomly assigned to treatment or control groups to minimise bias, is the engine behind most clinical trial data. Sir Austin Bradford Hill championed randomisation in the late 1940s, and regulators now generally expect a new therapy to demonstrate efficacy in randomised trials before granting approval. In practice this means strict inclusion and exclusion criteria, regular monitoring visits, and a predefined schedule for data collection (often every 3 months for a Phase III trial).
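The article does not say how participants are assigned to arms. One widely used scheme is permuted‑block randomisation, sketched below under stated assumptions: 1:1 allocation, a fixed block size of 4, and a hypothetical helper named `block_randomise` (not a standard library function). Real trials also conceal the schedule from site staff so that enrolment decisions cannot be influenced by it.

```python
import random


def block_randomise(n_participants, block_size=4, seed=None):
    """Return a 1:1 allocation list built from shuffled permuted blocks.

    Every block holds equal numbers of 'treatment' and 'control' slots,
    so arm sizes stay balanced throughout enrolment.
    """
    if block_size <= 0 or block_size % 2 != 0:
        raise ValueError("block_size must be a positive even number for 1:1 allocation")
    rng = random.Random(seed)  # seeded so the schedule can be reproduced and audited
    schedule = []
    while len(schedule) < n_participants:
        block = ["treatment"] * (block_size // 2) + ["control"] * (block_size // 2)
        rng.shuffle(block)  # random order within the block
        schedule.extend(block)
    return schedule[:n_participants]  # the final block may be truncated


allocation = block_randomise(12, block_size=4, seed=2024)
print(allocation)
print(allocation.count("treatment"), allocation.count("control"))  # 6 6
```

Because each block of four contains exactly two slots per arm, the groups can never differ by more than two participants at any point during enrolment, while the order within each block stays unpredictable.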

The upside is clear: randomisation, blinding, and protocol adherence protect against confounding factors, giving the results high internal validity. The downside? The trial population often looks nothing like the patients you see in a community clinic. Studies show that up to 80 % of real‑world patients would be excluded because of comorbidities, age, or socioeconomic barriers.

What Is Real‑World Evidence?

Real‑World Evidence (RWE), meaning findings derived from data collected outside the controlled environment of clinical trials (electronic health records, insurance claims, registries, and digital health devices), has grown rapidly over the past decade as health systems digitised. The 21st Century Cures Act (2016) directed the U.S. FDA to establish a program for evaluating RWE in regulatory decisions, and today the agency’s Sentinel Initiative monitors more than 300 million patient records for post‑market safety signals.

RWE captures how a drug performs across diverse ages, ethnicities, and disease stages - the exact groups that clinical trials often leave out. Sources include electronic medical records (EMRs), claims databases (e.g., Optum, IQVIA), patient registries, and wearables that continuously stream physiologic data.

Side‑by‑Side Comparison

| Aspect | Clinical Trial Data | Real‑World Evidence |
|---|---|---|
| Design | Prospective, randomised, often blinded | Observational, retrospective or prospective, no randomisation |
| Population | Highly selected; only ~20 % of real‑world patients meet eligibility criteria | Broad; includes patients with comorbidities, the elderly, and under‑represented minorities |
| Data completeness | ~92 % completeness for primary endpoints | ~68 % completeness; missing visits and labs are common |
| Timeline | 24‑36 months for Phase III | 6‑12 months from data pull to analysis |
| Cost | ~$19 million average per Phase III | 60‑75 % cheaper; often under $5 million |
| Regulatory weight | Primary basis for FDA/EMA approval | Supplementary: post‑approval safety, label expansions, and conditional approvals |
| Strengths | High internal validity; clear causal inference | High external validity; reflects real‑life effectiveness |
| Weaknesses | Limited generalisability; expensive and slow | Potential bias, data‑quality issues, confounding |
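The cost and timeline rows can be turned into quick back‑of‑the‑envelope estimates. This is a minimal sketch, assuming the 60‑75 % savings range applies directly to the ~$19 million Phase III average; `rwe_cost_range` is a hypothetical helper for illustration only.

```python
def rwe_cost_range(trial_cost, savings_low=0.60, savings_high=0.75):
    """Return (lowest, highest) RWE cost implied by a percentage-savings range."""
    return trial_cost * (1 - savings_high), trial_cost * (1 - savings_low)


PHASE_III_COST = 19_000_000   # ~$19 million average per Phase III (from the table)
TRIAL_MONTHS = (24, 36)       # Phase III timeline range
RWE_MONTHS = (6, 12)          # RWE timeline range, data pull to analysis

low, high = rwe_cost_range(PHASE_III_COST)
print(f"Implied RWE cost: ${low / 1e6:.2f}M to ${high / 1e6:.2f}M")
# Implied RWE cost: $4.75M to $7.60M

min_saved = TRIAL_MONTHS[0] - RWE_MONTHS[1]   # shortest trial vs longest RWE study
max_saved = TRIAL_MONTHS[1] - RWE_MONTHS[0]   # longest trial vs shortest RWE study
print(f"Time saved: {min_saved} to {max_saved} months")
# Time saved: 12 to 30 months
```

Only the top of the savings range lands below $5 million: on a $19 million trial, "often under $5 million" implies savings of roughly 74 % or more.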

Methodological Challenges and How They’re Solved

Because RWE lacks randomisation, analysts rely on statistical techniques to approximate the balance that RCTs create by design. One of the most common is propensity score matching, a method that pairs treated patients with untreated controls who have similar observable characteristics. This reduces confounding from measured variables, but it cannot adjust for variables that were never captured in the source data.
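A minimal, dependency‑free sketch of propensity score matching follows. It assumes a logistic‑regression propensity model fitted by plain gradient descent, greedy 1:1 nearest‑neighbour matching without replacement, and a caliper of 0.1 on the probability scale (an arbitrary illustrative value). The cohort, covariates, and function names are hypothetical; production analyses typically use an established statistics library and check covariate balance after matching.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(X, y, lr=0.5, epochs=3000):
    """Fit P(treated | covariates) with plain batch gradient descent."""
    n, k = len(X), len(X[0])
    w = [0.0] * (k + 1)  # w[0] is the intercept
    for _ in range(epochs):
        grad = [0.0] * (k + 1)
        for xi, yi in zip(X, y):
            err = sigmoid(w[0] + sum(a * b for a, b in zip(w[1:], xi))) - yi
            grad[0] += err
            for j, xj in enumerate(xi):
                grad[j + 1] += err * xj
        w = [wj - lr * g / n for wj, g in zip(w, grad)]
    return w


def propensity_scores(w, X):
    return [sigmoid(w[0] + sum(a * b for a, b in zip(w[1:], xi))) for xi in X]


def match_nearest(scores, treated, caliper=0.1):
    """Greedy 1:1 nearest-neighbour matching without replacement.

    Each treated patient takes the closest remaining control; pairs whose
    score gap exceeds the caliper are discarded.
    """
    controls = [i for i, t in enumerate(treated) if t == 0]
    pairs = []
    for i, t in enumerate(treated):
        if t != 1 or not controls:
            continue
        j = min(controls, key=lambda c: abs(scores[c] - scores[i]))
        if abs(scores[j] - scores[i]) <= caliper:
            pairs.append((i, j))
            controls.remove(j)  # without replacement: each control is used once
    return pairs


# Hypothetical toy cohort: [age scaled to 0-1, comorbidity flag]
X = [[0.2, 0], [0.4, 1], [0.6, 1], [0.8, 0], [0.3, 1],
     [0.5, 0], [0.7, 1], [0.1, 0], [0.45, 1], [0.65, 0]]
treated = [0, 1, 1, 1, 0, 0, 1, 0, 0, 1]
outcome = [5.0, 6.5, 7.0, 8.0, 5.5, 6.0, 7.5, 4.5, 6.0, 7.0]

scores = propensity_scores(fit_logistic(X, treated), X)
pairs = match_nearest(scores, treated)
if pairs:
    att = sum(outcome[i] - outcome[j] for i, j in pairs) / len(pairs)
    print(f"{len(pairs)} matched pairs, matched mean difference: {att:.2f}")
```

Greedy matching depends on the order in which treated patients are processed; optimal matching avoids that at higher computational cost. Either way, the match only balances the covariates in `X`, which is exactly the limitation noted above.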

Clinical trials, on the other hand, invest heavily in protocol enforcement - site monitoring, source‑document verification, and strict timing of visits. That rigor drives the 92 % data‑completeness rate reported in a 2024 Scientific Reports analysis of diabetic kidney disease studies.

Hybrid designs try to get the best of both worlds. For example, the FDA’s 2024 draft guidance on hybrid trials recommends using an RCT backbone for the primary efficacy endpoint while layering RWE onto safety and long‑term outcomes. This approach can shorten timelines and lower costs while preserving the causal clarity of randomisation.

Regulatory Landscape - Who Accepts What?

The FDA’s stance has shifted considerably. In 2015, only one drug was approved with any RWE component; by 2022 that number had risen to 17, spanning oncology, rare diseases, and cardiovascular therapies. The European Medicines Agency (EMA) has moved further still: 42 % of its post‑authorization safety studies in 2022 incorporated real‑world data, compared with 28 % at the FDA.

Nevertheless, regulators still view RCTs as the gold standard for initial safety and efficacy. Former FDA commissioner Dr. Robert Califf testified that RWE can “complement” but not replace RCTs for first‑in‑human decisions.

Economic Impact - What Do the Numbers Say?

The global RWE market was valued at $1.84 billion in 2022 and is projected to hit $5.93 billion by 2028 (CAGR 21.5 %). By contrast, the traditional clinical‑trial market grows at only 6.2 % annually. Payers are also demanding RWE: a 2022 Drug Topics survey found 78 % of U.S. payers now require real‑world cost‑effectiveness data before adding a drug to formularies.
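The market figures can be sanity‑checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. This sketch assumes the 2022 and 2028 values bracket exactly six years of growth; `cagr` is a small illustrative helper.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


rwe_rate = cagr(1.84, 5.93, 2028 - 2022)  # US$ billions, 2022 -> 2028
print(f"Implied RWE market CAGR: {rwe_rate:.1%}")  # Implied RWE market CAGR: 21.5%

# Growth multiple over the same six years at each rate
print(f"RWE market: {(1 + rwe_rate) ** 6:.2f}x")               # RWE market: 3.22x
print(f"Clinical-trial market at 6.2%: {1.062 ** 6:.2f}x")     # Clinical-trial market at 6.2%: 1.43x
```

The quoted 21.5 % is consistent with the two endpoint values, and over the same period it more than triples the market, while 6.2 % annual growth adds a little over 40 %.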

Therapeutic areas differ. Oncology leads the pack, with 45 % of RWE studies focused there because placebo‑controlled trials are often impractical. Rare diseases follow at 22 %, where small patient numbers make RCTs nearly impossible.

Future Trends - Where Are We Headed?

Artificial intelligence is turning raw electronic health records into predictive models. Google Health’s 2023 study showed AI could forecast treatment response with 82 % accuracy, edging out traditional RCT‑based analyses at 76 %.

At the same time, data‑quality initiatives like the VALID Health Data Act (2022) and the NIH’s HEAL Initiative (2021) are tightening standards for RWE reproducibility. Expect more hybrid trials, tighter governance, and wider acceptance of RWE in label expansions over the next few years.

Practical Tips for Researchers and Decision‑Makers

- Start with a clear question: are you looking for efficacy (use RCT) or effectiveness (use RWE)?

- If you choose RWE, invest in data‑quality checks: audit completeness, verify timestamps, and apply propensity‑score methods where feasible.

- Consider a hybrid design early. Embedding an RCT within an existing registry can cut costs by up to 25 % while preserving randomisation.

- Engage regulators early. Submit a data‑quality plan alongside your RWE protocol, for example through the FDA’s formal meeting process or EMA scientific advice.

- Document every analytic decision. Transparency is the biggest predictor of reproducibility in RWE studies.
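A completeness audit, like the ~92 % vs ~68 % figures in the comparison table, depends on how completeness is defined. The sketch below assumes completeness means the share of expected values that are present, treating `None` or an empty string as missing; the records and field names are hypothetical, and the timestamp check only flags dates that do not parse as ISO 8601 (`YYYY-MM-DD`).

```python
from datetime import date


def is_present(value):
    return value not in (None, "")


def completeness(records, fields):
    """Share of expected (record, field) values that are present."""
    expected = len(records) * len(fields)
    present = sum(is_present(r.get(f)) for r in records for f in fields)
    return present / expected if expected else 0.0


def completeness_by_field(records, fields):
    return {f: completeness(records, [f]) for f in fields}


def unparseable_dates(records, field, id_field="patient_id"):
    """IDs of records whose date field is missing or not a valid ISO date."""
    bad = []
    for r in records:
        try:
            date.fromisoformat(r.get(field) or "")
        except (TypeError, ValueError):
            bad.append(r[id_field])
    return bad


# Hypothetical extract: one row per patient visit
records = [
    {"patient_id": 1, "hba1c": 7.1, "egfr": 62, "visit_date": "2024-01-10"},
    {"patient_id": 2, "hba1c": None, "egfr": 55, "visit_date": "2024-01-12"},
    {"patient_id": 3, "hba1c": 6.8, "egfr": None, "visit_date": ""},
    {"patient_id": 4, "hba1c": 8.0, "egfr": None, "visit_date": "2024-02-01"},
]
fields = ["hba1c", "egfr", "visit_date"]

print(f"Overall completeness: {completeness(records, fields):.0%}")
# Overall completeness: 67%
print(completeness_by_field(records, fields))
# {'hba1c': 0.75, 'egfr': 0.5, 'visit_date': 0.75}
print(unparseable_dates(records, "visit_date"))  # [3]
```

Reporting completeness per field, not just overall, shows which variables (here the hypothetical `egfr`) are too sparse to support an analysis.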

Frequently Asked Questions

What is the main advantage of clinical trial data?

Clinical trial data provides high internal validity because randomisation and blinding minimise bias, allowing a clear causal link between a treatment and its outcome.

Why do regulators now accept real‑world evidence?

Regulators recognise that RWE fills gaps left by trials: it shows how drugs perform across broader populations, informs safety monitoring, and can speed up label expansions when RCTs are impractical.

Can I replace a Phase III trial with real‑world data?

Not for initial approval. Agencies still require RCTs to establish efficacy and safety. RWE is best used after approval for post‑market surveillance or to support additional indications.

What statistical methods help reduce bias in RWE?

Techniques like propensity‑score matching, inverse‑probability weighting, and instrumental variable analysis are commonly applied to create comparable treatment groups.
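Inverse‑probability weighting reweights each patient by the inverse of the probability of the treatment they actually received. A minimal sketch, assuming propensity scores have already been estimated and lie strictly between 0 and 1; it uses the normalised (Hajek) form of the estimator, and the toy cohort is hypothetical, built with two strata whose baselines differ (10 vs 0), a true treatment effect of +2, and the high‑baseline stratum treated 75 % of the time.

```python
def ipw_ate(outcomes, treated, scores):
    """Normalised (Hajek) inverse-probability-weighted average treatment effect."""
    if any(not 0.0 < p < 1.0 for p in scores):
        raise ValueError("propensity scores must lie strictly between 0 and 1")
    w1 = [t / p for t, p in zip(treated, scores)]              # treated: 1 / e(x)
    w0 = [(1 - t) / (1 - p) for t, p in zip(treated, scores)]  # controls: 1 / (1 - e(x))
    mu1 = sum(w * y for w, y in zip(w1, outcomes)) / sum(w1)
    mu0 = sum(w * y for w, y in zip(w0, outcomes)) / sum(w0)
    return mu1 - mu0


# Hypothetical cohort: first four patients in the high-baseline stratum (score 0.75),
# last four in the low-baseline stratum (score 0.25).
treated = [1, 1, 1, 0, 1, 0, 0, 0]
scores = [0.75, 0.75, 0.75, 0.75, 0.25, 0.25, 0.25, 0.25]
outcomes = [12, 12, 12, 10, 2, 0, 0, 0]

n1 = sum(treated)
naive = (sum(y for y, t in zip(outcomes, treated) if t) / n1
         - sum(y for y, t in zip(outcomes, treated) if not t) / (len(treated) - n1))
print(f"Naive difference in means: {naive:.2f}")                  # 7.00
print(f"IPW estimate: {ipw_ate(outcomes, treated, scores):.2f}")  # 2.00
```

The naive comparison is inflated because treated patients are concentrated in the high‑baseline stratum; weighting recovers the +2 effect built into the toy data. Scores close to 0 or 1 produce very large weights, so analysts often trim or stabilise them, and, as with matching, weighting only corrects for confounders that were measured.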

How long does it take to conduct a real‑world study?

Most RWE projects finish within 6‑12 months, depending on data access, cleaning, and analysis complexity, which is much faster than the typical 24‑36 months for a Phase III trial.

Jacqueline Galvan

November 1, 2025 at 13:26

Thank you for presenting such a comprehensive overview of clinical trial data and real‑world evidence. The distinction between internal and external validity is particularly clear in your side‑by‑side table. It is encouraging to see the emphasis on hybrid designs that aim to balance rigor with relevance. Researchers can appreciate the detailed cost comparison, which highlights the financial pressures on drug development. The discussion of propensity‑score matching provides a useful reminder of statistical tools available to mitigate bias in observational studies. Your enumeration of regulatory trends across the FDA and EMA adds valuable context for stakeholders. The inclusion of AI‑driven predictive models underscores the evolving nature of evidence generation. Moreover, the practical tips section offers actionable guidance for investigators embarking on new studies. The article also wisely points out that while RWE cannot replace RCTs for initial approvals, it is indispensable for post‑market surveillance. The clear articulation of strengths and weaknesses for each approach helps readers quickly grasp the trade‑offs. I was particularly impressed by the citation of recent surveys indicating payer demand for real‑world cost‑effectiveness data. Highlighting therapeutic area differences, such as oncology’s leading role, adds depth to the analysis. The reference to the VALID Health Data Act demonstrates awareness of ongoing policy developments. Overall, the piece maintains a formal yet accessible tone throughout. It successfully integrates statistical, regulatory, and economic perspectives. Readers are left with a nuanced understanding of how to leverage both data streams effectively. I look forward to seeing more research that adopts the hybrid model you describe.

Tammy Watkins

November 13, 2025 at 03:13

While the original post masterfully outlines the landscape, it is imperative to stress the urgency of adopting hybrid trials now; the regulatory climate is rapidly evolving, and delays could jeopardize competitive advantage. One must recognize that the demonstrable cost savings are not merely theoretical but have been realized across multiple recent oncology studies. The integration of real‑world data into early‑phase protocols can dramatically accelerate patient recruitment, especially for rare diseases. Moreover, the statistical rigor afforded by contemporary propensity‑score techniques should reassure any lingering skeptics. In light of these facts, I assert that the industry must act decisively, lest we fall behind global innovators. The evidence presented is compelling, and it demands immediate implementation.

Casey Morris

November 24, 2025 at 17:00

Indeed, the article, with its thorough exposition, offers a nuanced perspective, however, one might argue, perhaps, that the reliance on RWE could, in certain contexts, introduce confounding variables, especially when data completeness hovers around 68 %, which, while acceptable, does raise questions regarding the robustness of conclusions; furthermore, the cost differential, though impressive, should be weighed against potential biases inherent in observational designs, and the regulatory acceptance, though expanding, remains contingent upon rigorous methodological standards, which, admittedly, are evolving at a commendable pace.

Teya Arisa

December 6, 2025 at 06:46

Excellent points raised above, and I would like to add that the collaborative spirit between academia and industry can further enhance data quality 😊. By establishing clear governance frameworks and employing standardized data models, we can mitigate many of the shortcomings highlighted. It's reassuring to see regulators becoming more flexible, which paves the way for innovative study designs 🚀.

Doreen Collins

December 17, 2025 at 20:33

Building on the insightful discussion, it's clear that supportive coaching can bridge the gap between rigorous trial methodology and pragmatic real‑world analysis. Concise: data transparency is key. Long‑winded thoughts: when we consider the myriad of patient pathways, the variability becomes both a challenge and an opportunity, inviting us to refine our analytic techniques while remaining empathetic to patient experiences.

Dawn Bengel

December 29, 2025 at 10:20

Frankly, the over‑reliance on RWE feels like a shortcut that undermines scientific integrity 😠. While cost savings are tempting, we must not sacrifice methodological rigor for budgetary concerns. The healthcare community deserves data that is both affordable and trustworthy, not just cheap approximations 😊.

junior garcia

January 10, 2026 at 00:06

Great read! Simple facts: RCTs give us clear cause‑and‑effect, RWE shows us real life. Both are needed, and together they tell the full story.

Dason Avery

January 21, 2026 at 13:53

What a fascinating convergence of philosophy and science! By blending the certainty of controlled experiments with the messy truth of everyday practice, we approach a more holistic understanding of treatment impact. Keep pushing the boundaries, and remember: the best evidence often lies somewhere in between 🤔.

Kathryn Rude

February 2, 2026 at 03:40

Hmm interesting point but maybe overhyped? The data hype often overshadows nuance. Still, an integrated view is promising :)

Lindy Hadebe

February 13, 2026 at 17:26

The article repeats known facts without offering fresh insight.